More Than A Category

A Human Machine Perception Study

In this day and age, neural networks and deep learning form the basis of most applications we know as Artificial Intelligence. Such systems are trained on biased datasets and can, among other things, perceive, identify and verify human faces in images and videos. Still, they cannot understand who we really are as people. But do we know who we are?

Our own self-perception is constantly changing and we may not even recognise ourselves in yesterday’s

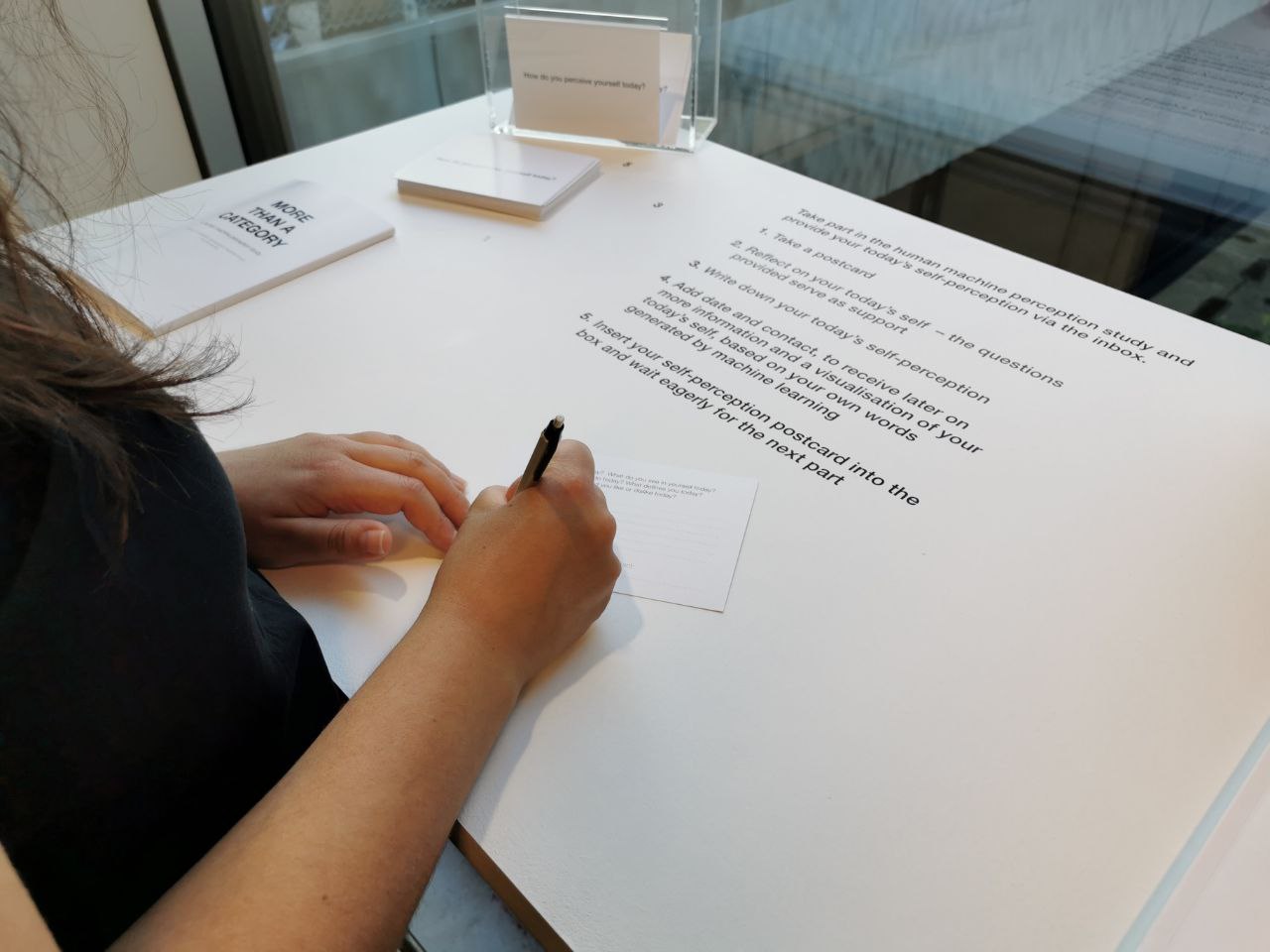

self. “more than a category” explores in a participative approach the possibilities of a text-to-image

neural network, as a tool to visualise and improve one’s daily self-perception.

All images were created with the open-source machine learning program Aleph2Image, made available by Ryan Murdock (Twitter: @advadnoun). Aleph2Image combines two neural network models by OpenAI, DALL·E (its encoder and decoder) and CLIP, and generates images from a text input (max. 60 words). Here both the input and the output are based on perception and are therefore subjective.

Exhibition: Booklet

ZHdK, Interaction Design, Bachelor Project, 2021

Student: Danuka Ana Tomas (IAD)

Mentors: Dr. Joëlle Bitton (IAD), Stella Speziali (IAD), Florian Bruggisser (IAD)